Abstract

Multi-finger grasping relies on high quality training data, which is hard to obtain: human data is hard to transfer and synthetic data relies on simplifying assumptions that reduce grasp quality. By making grasp simulation differentiable, and contact dynamics amenable to gradient-based optimization, we accelerate the search for high-quality grasps with fewer limiting assumptions. We present Grasp’D-1M: a large-scale dataset for multi-finger robotic grasping, synthesized with Fast- Grasp’D, a novel differentiable grasping simulator. Grasp’D- 1M contains one million training examples for three robotic hands (three, four and five-fingered), each with multimodal visual inputs (RGB+depth+segmentation, available in mono and stereo). Grasp synthesis with Fast-Grasp’D is 10x faster than GraspIt! [1] and 20x faster than the prior Grasp’D differentiable simulator [2]. Generated grasps are more stable and contact-rich than GraspIt! grasps, regardless of the distance threshold used for contact generation. We validate the usefulness of our dataset by retraining an existing vision-based grasping pipeline [3] on Grasp’D-1M, and showing a dramatic increase in model performance, predicting grasps with 30% more contact, a 33% higher epsilon metric, and 35% lower simulated displacement.

[1] A. T. Miller and P. K. Allen, “Graspit! a versatile simulator for robotic grasping,” IEEE Robotics & Automation Magazine, 2004.

[2] D. Turpin, L. Wang, E. Heiden, Y.-C. Chen, M. Macklin, S. Tsogkas, S. Dickinson, and A. Garg, “Grasp’d: Differentiable contact-rich

grasp synthesis for multi-fingered hands,” arXiv preprint arXiv:2208.12250, 2022.

[3] J. Lundell, F. Verdoja, and V. Kyrki, “DDGC: Generative deep dexterous grasping in clutter,” IEEE Robotics and Automation Letters, 2021.

Demo

Fast-Grasp’D

Graspit!

Method

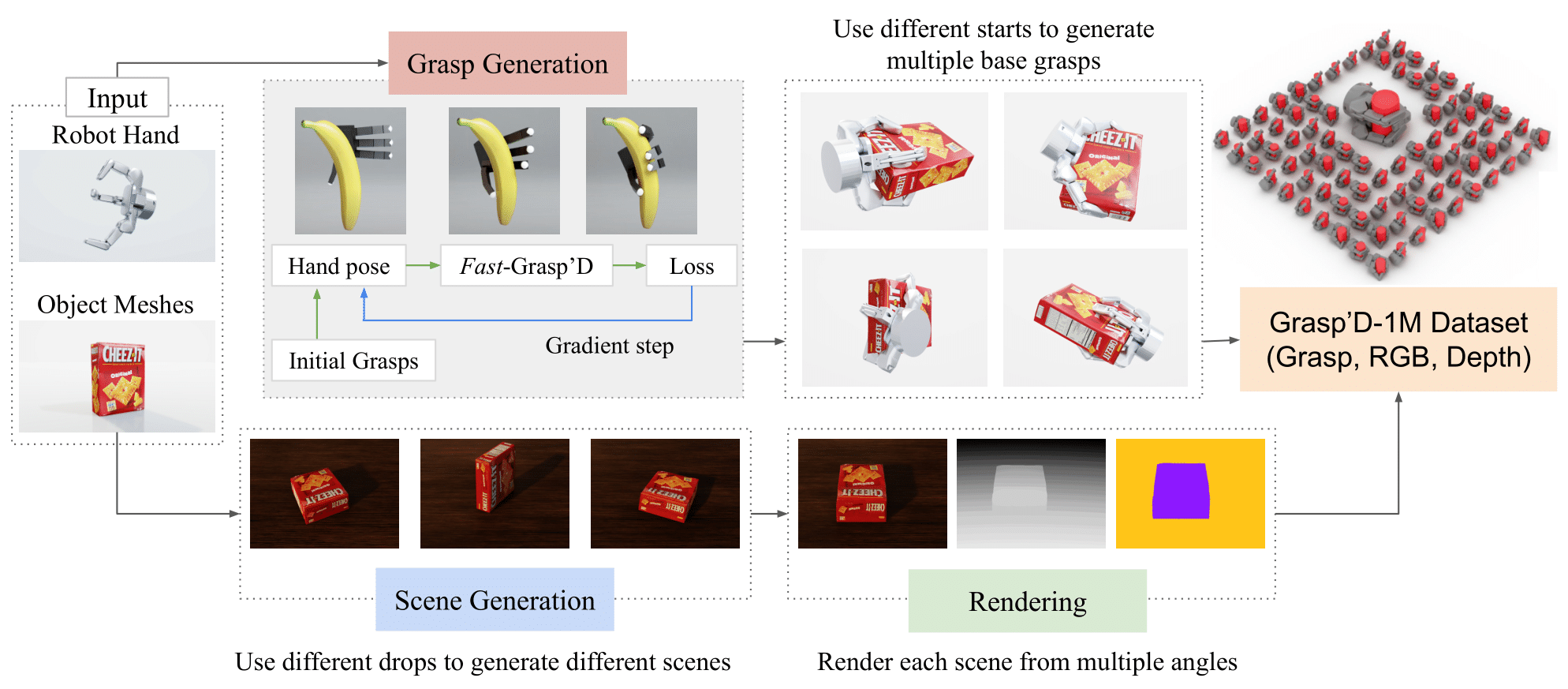

Our grasp synthesis pipeline. Our grasp synthesis pipeline generates the Grasp’D-1M dataset of one million unique grasps in three stages. (1) Grasp generation: For any provided (robot hand, object) pair, we generate a set of base grasps by gradient descent over an objective computed by Fast-Grasp’D, our fast and differentiable grasping simulator. (2) Scene generation: We simulate multiple drops of each object onto a table to create scenes with different object poses and transfer base grasps to these scenes. (3) Rendering: Finally, we render each scene (RGB, depth, segmentation, 2D/3D bounding boxes in mono+stereo) from multiple camera angles.

BibTeX

@InProceedings{turpin2023fastgraspd,

author = {Dylan Turpin and Tao Zhong and Shutong Zhang and Guanglei Zhu and Eric Heiden and Miles Macklin and Stavros Tsogkas and Sven Dickinson and Animesh Garg},

title = {Fast-Grasp'D: Dexterous Multi-finger Grasp Generation Through Differentiable Simulation},

booktitle = {ICRA},

year = {2023},

}